Online Safety Act 2023: A British 2025 Dystopia of Internet Censorship

An in-depth look at Part 3 of the Online Safety Act, and why it is an absolutely appalling piece of legislation.

Ah, the damn Online Safety Act 2023. What a terrible bill.

It seems to have broken half of the internet, with website after website now requiring you to verify your age before you can use basic features. A VPN subscription has effectively become a stealth tax rise: a sort-of essential adblocker for some respite from the nanny state.

The Online Safety Act 2023 (gov.uk guidance) is a piece of legislation that aims to protect kids and adults alike from harmful content on the internet. It became law in October 2023, and its child safety duties came into effect on 25 July 2025. However, as you will see, it now only serves to hinder everyone’s use of the internet instead.

This bill shouldn’t have been one bill. It should have been 15 smaller bills, and that is why the implementation is completely daft.

My main observation from reading the Online Safety Act is that the wording is extremely imprecise. Even what counts as a Category 1 service (the likes of Meta, X and Google) was left to secondary legislation, and two years on, Ofcom has still not published the register saying which services actually fall into that category.

Between the Online Safety Act, the continuing 5G rollout, the failing Royal Mail postal service, and Ofcom’s usual day-to-day work of protecting consumers, it is clear that the UK government wants to expand Ofcom and regulate industry more than the previous Conservative government did.

The bill is 353 pages! It’s so long that I’m not even sure lawmakers have read and understood it in full, and I think that’s why it has so many holes.

I have argued since my university days that censorship does not work. Censorship leads to more censorship. And people who are determined to see the content they wanted to see in the first place will see it anyway, using web proxies, VPNs, and Tor. What’s the actual point of all this, when there are clear and obvious workarounds available to those ‘criminal users’?

The point is to make it harder for kids to access the content, and indeed, this is the work of Michelle Donelan, the former Conservative Secretary of State for Science, Innovation and Technology, and a parent herself.

She, of all people, should know that children are naturally curious. The answer is not to forbid them from crossing roads on their own and never teach them how to cross one. The answer is to educate children on safe internet use. That responsibility lies with schools and parents, both of which are currently under severe strain, so it seems to have been offloaded onto businesses.

Here are my favourite parts of the bill.

‘Illegal Content Risk Assessment Duties’

It is unclear what illegal content is covered under this section. The Act does technically define ‘illegal content’ (section 59) as content amounting to a ‘relevant offence’, and it lists ‘priority offences’ in Schedules 5 to 7, such as terrorism and child sexual abuse offences. Separately, it singles out suicide and self-harm content and the promotion of eating disorders as content harmful to children.

But past that? Who knows. Because this bill doesn’t allow for nuance when it comes to ‘illegal content’.

For example, a whistleblower could leak material in good faith to a journalist, who then publishes pieces based on what they were shown. That journalist may now have to worry about the Act being used as additional justification for a prosecution under the Official Secrets Act, or for a defamation claim, even when their disclosures are in the public interest. And this could undermine democracy.

What about Theresa May’s illegal pornography laws? Are they going to be enforced under the Online Safety Act?

Because as we all know, under The Audiovisual Media Services Regulations 2014, porn must follow home video rules or it’s illegal. Here are some of the acts that, under the Online Safety Act, these morons could potentially put you in jail for depicting in porn:

BDSM

Physical restraint

Peeing on someone

Facesitting

Fisting

Christ. For queer people, enforcement of this section would basically look like the return of Section 28.

Democratic Importance

But it’s all OK! Because the Online Safety Act includes a provision requiring platforms to consider freedom of expression—especially when handling “democratically important content.”

This means we’ve codified a narrow form of free speech into law, particularly for Category 1 platforms like Facebook, YouTube, and X. These platforms must conduct risk assessments, publish transparency reports, and ensure political debate isn’t unfairly silenced.

In practice? Platforms will probably overmoderate all sides of political arguments just to avoid Ofcom fines. The law was intended to protect public discourse. But ironically, it may literally do just the opposite.

So yes, the law technically protects your right to talk politics online, even with people you strongly disagree with.

In practice, it’s now legally encouraged to engage with conservatives, whether you want to or not, and platforms might be reluctant to remove hate speech if doing so risks complaints to Ofcom.

Oh joy.

Providers of search services and their duties of care

Not content with banning porn and breaking online debate, they are breaking search engines too.

Search engines must ‘identify, assess, and mitigate risks associated with illegal content’, and they must ‘empower users to report content’.

Google can’t provide a decent search experience most of the time, as it’s too busy breaking its core functionality with generative AI (Bard, since rebranded as Gemini). And now, search engines are expected to take on the role of moderators and moral arbiters, despite already struggling to deliver reliable results.

No thanks.

This only happens in Britain. Everyone else in the world gets the unfiltered experience. Now, global platforms must bend to Donelan’s wishes just to operate in the UK. The law is so poorly drafted, it’s hard to believe she consulted any operator of a search engine or small business before pushing it through.

Of course, if a large organisation gets it wrong, there is no clear, independent route to appeal beyond the provider’s own complaints process. Of course this will be a logistical nightmare. Of course there is again no practical definition of ‘illegal content’ beyond ‘it’s common sense, it’s obvious’.

And how will this impact smaller engines like DuckDuckGo, which rely on search engines such as Bing for their results? Will Microsoft be forced to pre-censor its search index before passing it on to other players?

This also raises concerns about how smaller platforms and startups will manage compliance costs and vague obligations. The law’s heavy burden risks stifling innovation and competition, further entrenching the dominance of the big tech players.

This is a shitshow, and will only stifle innovation. Not protect the kids.

Journalistic Content

The Online Safety Act requires Category 1 platforms hosting journalistic content to self-moderate, including maintaining effective complaints procedures.

In principle, I agree with this. There should be minimum standards for websites to police their own communities and handle grievances responsibly.

There’s just one problem.

I don’t think it belongs in law.

And I don’t think that having a consumer complain to Ofcom about something I’ve said online is a good use of time for either me or Ofcom.

The internet is anarchic and open by design. Just ask Bo Burnham. That’s kinda the entire point of the thing: to spread information, opinions and emotions to others, without prejudice or judgement. Differences of opinion should be allowed.

This provision feels less about protecting speech and more about policing fact-checking and misinformation.

But how do you define misinformation? That was the problem these lawmakers were supposed to solve in two years, and yet all we got was this stupid, broken bill. All this will do is cause users and outlets to self-censor, and suppress true free speech: speech that is often uncomfortable or controversial without being a crime.

Aka, THE TRUTH.

Because of how this provision is written, hate crimes and harmful content get a different standard applied, leaving the boundaries of “safe” speech dangerously blurry.

Subscribe to Emily Chomicz today to get more quality investigative journalism!

Safety Duties Protecting Children

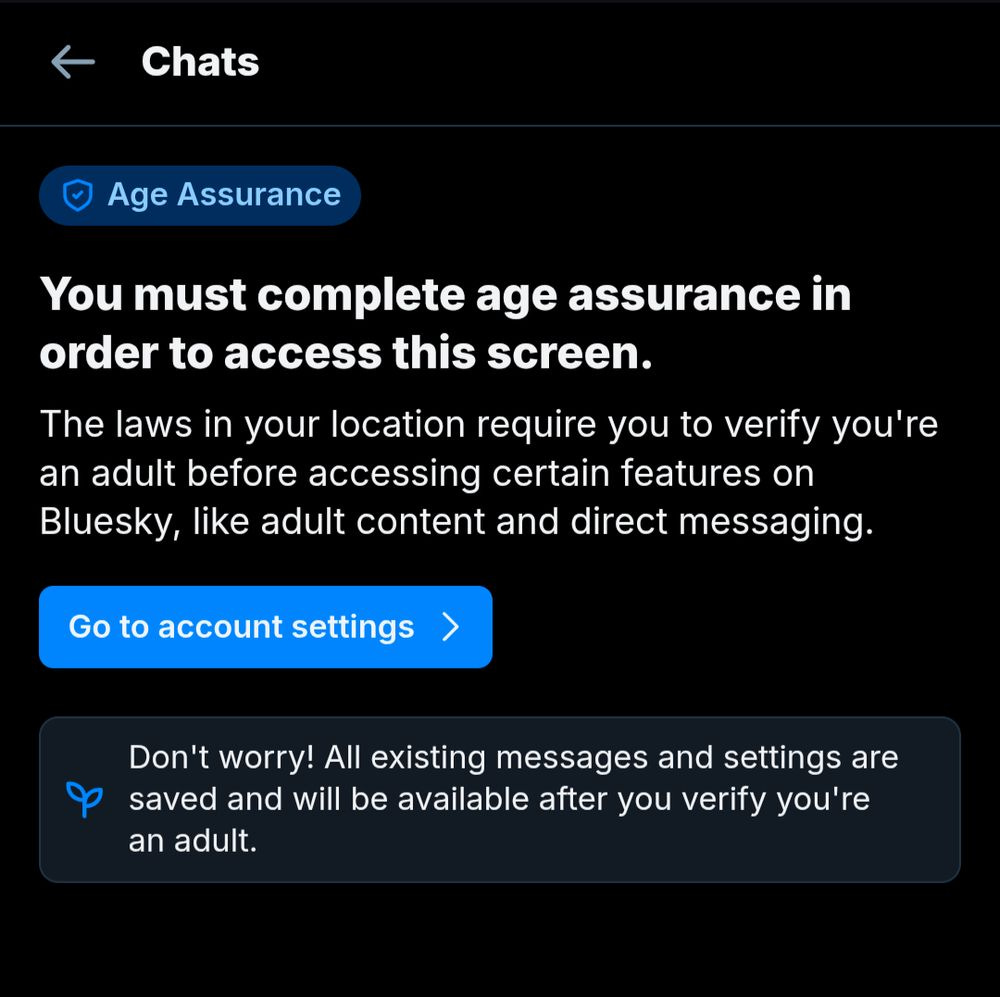

Here we go. This is the provision that broke the internet.

What the hell is this?

I can’t blame Bluesky for doing this. The age assurance provision in the Online Safety Act is written so broadly, it basically forces platforms to assume that every adult online is a potential threat to children.

Should we ban children from public spaces just because there’s a small risk of encountering inappropriate behaviour? Should we stop them from going to the park, the library, or the supermarket, just in case something uncomfortable happens, despite there being no widespread evidence of harm?

That would sound absurd in the real world. And yet, that’s exactly how the internet is being treated under the Online Safety Act.

It’s clear what the intent was here: to stop kids learning about dangerous, dangerous ideas. Such as, for example, the fact that trans people exist. Some interpretations of what is legal online could be used to silence trans voices or marginalised views, even when they’re lawful offline. Without clearer safeguards, this bill risks disproportionately harming marginalised and dissenting voices under the guise of ‘protecting the kids’.

I’m not censoring myself on the internet when I am talking to kids. And neither should you. I think it’s more important than ever for kids to know about topics such as politics, safe relationships, and LGBT rights. The government doesn’t agree.

Kids have already got around it by holding photos up to their webcams to fool the age estimation checks. It’s actually laughable.

If kids can get onto Cool Maths Games at school, they can work out how to use a proxy or a VPN service. School web filters already prove the point: in practice, they just become a challenge for kids to defeat.

User Empowerment Duties

This is the only part of the legislation that I like so far. It promotes user control over the content people see, requiring Category 1 platforms to let users filter content related to suicide, self-injury, eating disorders, and hateful or abusive material concerning protected characteristics.

Making these controls available to all registered adult users supports personalisation and user choice. It’s actually a step forward, and one genuinely positive part of this Act.

I think legally mandating content warnings and content filters as options for users is OK.

But why was there a giant pointless bill tacked onto this?

Kids aren’t stupid. They’re not helpless. If we focused on teaching them to use tools like content filters, user controls, and critical thinking, instead of locking down the entire internet, we might actually build resilience, rather than a generation of kids who are told not to do something, do it anyway out of natural curiosity, and end up traumatised.

That would’ve been the smart approach - working with schools, parents, and educators. But instead, we got blanket restrictions, surveillance tech, and a default assumption that children, and adults, can’t be trusted.

Freedom of Expression and Privacy

And finally, at least for this analysis, there’s the matter of freedom of expression and privacy, which the Online Safety Act claims to protect.

Category 1 platforms are now required to carry out risk assessments, publish impact reports, and show how they are actively supporting these rights. On paper, that sounds like progress.

But here’s the dilemma:

Will this actually safeguard free speech, or will it become a shield for hate speech that masquerades as “opinion” and “being a concerned citizen”? After all, it’s just protected speech, mate.

Freedom of expression matters, but it isn’t freedom from consequences, or freedom to target others without accountability. The law doesn’t draw a clear enough line between harmful but lawful content and genuine public discourse, which leaves the burden on users like me to navigate this quagmire.

So yes, expression and privacy should be protected, but so should people. And right now, I’m not convinced the law strikes that balance fairly.

Conclusion

The Online Safety Act may have been written with good intentions, but in practice, it’s a complete and utter byzantine mess.

It doesn’t protect privacy; it undermines it, with sweeping obligations that encourage surveillance, data collection, and risk-averse overreach.

It doesn’t foster innovation, it merely punishes it through vague rules and impossible compliance burdens.

And despite its constant invocation of "protecting children", it fails even there, opting for blunt restrictions over real-world education and better funding for mental health support.

This isn’t just a flawed law, it’s fucked. Patching or amending it won’t help; the law’s foundations are broken. Trying to fix it by removing a few sections would be like rearranging deck chairs on the Titanic.

The Act’s demands for surveillance and data collection, including age checks that can involve collecting users’ identity documents, risk clashing with existing data protection law such as the UK GDPR. This further complicates compliance and puts user privacy at risk. It’s just like Theresa May’s Snooper’s Charter all over again. Did they not learn anything from last time?!

The entire bill needs to be killed and rebuilt from the ground up, this time as evidence-based policy developed in consultation with experts such as computer scientists, sociologists, and psychologists. That means splitting it into targeted legislation covering, for example, child protection, content filters, and the protection of news outlets. Smaller, focused bills would likely be easier to pass in Parliament, too.

Here’s my independent, unsponsored recommendation to my readers: use a VPN such as ProtonVPN or NordVPN to protect yourself. They’re not perfect, of course, but they offer a basic shield against our civil liberties being violated in real time.

Until the Online Safety Act meets its end under the legislative guillotine, that’s the best we can do to protect ourselves. And contact your MP today to tell them how you feel about this law.