The AI Ethics Dilemma in Creativity, Technology and Disability

The problems with artificial intelligence stem from its novelty. Like all new technology, it will in time be accepted and used in ways that make sense once the craze dies down.

The AI Craze Is a Bubble

The problems we face with AI are as old as technology itself. I remember how, in the 2010s, we were told at school not to plagiarise from Wikipedia, and were demonised for even mentioning the site because it was considered so unreliable.

After all, Wikipedia has had many controversies over the years, and we cannot ignore its bias: because of its open nature, its editors are significantly more likely to be male and from English-speaking countries. But now, after years of development, it’s brilliant for learning things.

The problems with artificial intelligence are societal. Just as the MediaWiki software that Wikipedia runs on isn’t at fault for Wikipedia’s issues, neither are generative AI or large language models. Right now, the technology is merely highlighting the 21st-century problems of globalisation and the democratisation of access to information, where increasingly everyone gets to have an opinion, regardless of its validity.

Computers have aided humans in creating art for decades. My favourite example is a classic: Shrek, which I cannot believe is now over twenty-four years old. It was a revolutionary leap forward when it came out, made possible by the unconventional decision to use SGI workstations running Linux. Old Linux forum posts make it clear that investing in cutting-edge technology paid off with Shrek.

The overwhelming problem with AI today lies in its commercialisation, training, and marketing, as well as its proprietary nature, which impedes the spread and adoption of these technologies; the likes of ChatGPT, Gemini, and Copilot are particularly problematic. This is not a new problem in technology, though, as the following examples show:

High-bandwidth Digital Content Protection (HDCP) is a form of DRM meant to stop people pirating movies, but it has ended up being a nightmare for anyone who uses a video capture card or an older computer. Pirates quickly solved that problem for us.

Microsoft famously ended up in an antitrust lawsuit for bundling Internet Explorer with Windows in an attempt to kill off Netscape Navigator. In a rare intervention, a US court ordered Microsoft to be split in two, separating the Windows business from the rest of the company, although that ruling was overturned on appeal.

The Dotcom bubble led to a rapid mass hiring of IT staff as the internet took off, followed by an equally rapid mass firing once people realised that the solution to the world’s problems doesn’t come from buying a domain and sticking some basic HTML on it.

That last one is particularly pertinent, as the Dotcom bubble has a lot of parallels to what we are experiencing now. Let’s break it down to see the economic similarities:

The economic shock of the pandemic resembles the conditions leading up to Black Wednesday in 1992, when Britain crashed out of the European Exchange Rate Mechanism (ERM). Inflation had reached around 10% in 1990, which meant that anyone with a mortgage had to pay absolutely insane interest rates to keep a roof over their head (although, of course, houses were much cheaper and more plentiful back then!)

The pandemic then made 2021 and 2022 crazy years for IT recruitment, when it felt like anyone with internet access and some spare time could retrain as a software developer through a six-week coding bootcamp. Of course, this would prove to be no substitute for years of dedicated study or a university degree in Computer Science.

When Russia invaded Ukraine, the UK entered a mild stagnation (or, if you’re being brutally honest, basically a recession). The effects of Brexit started to bite once the transition period ended at the start of 2021, and the economy remains sluggish to this day as we miss out on much-needed investment. The 1.2 percentage point increase in employers’ National Insurance contributions certainly isn’t helping either, especially in the tourism and hospitality industries.

As a result, we are watching a technology bubble burst in real time, as the IT layoffs that started in 2023 continue into 2025. Even Google CEO Sundar Pichai is concerned about the huge valuations of companies and the crazy expensive partnerships being struck to build as many data centres as possible for AI.

This is clearly not a sustainable trajectory for tech, and, like all bubbles, this one will eventually burst. It has also led to some absolutely ridiculous behaviour from those in power: Labour is now promoting ‘AI attendance targets’ to school leaders in an attempt to fix the ailing British economy, and Trump is finally being investigated by journalists for enriching his family with cryptocurrency from dodgy sources.

This whole thing reminds me of Beenz in the Dotcom era, when it was clearly an insane idea to let a private company run a financial system. Of course, Bitcoin et al. are built on more solid decentralised principles, but crypto still suffers from the same systemic problems, such as price shocks and speculation.

So much for the promises of independence from the wider financial system.

It’s all a fragile house of cards, and it’s going to collapse sooner or later. This is a feature of capitalism, where for some reason we let Jeff Bezos, the third-richest man in the world, fuck up the economy for a bit with his new AI startup. He’s going to have to learn how to be human the hard way when the bubble finally bursts.

What Does it Mean to be Human?

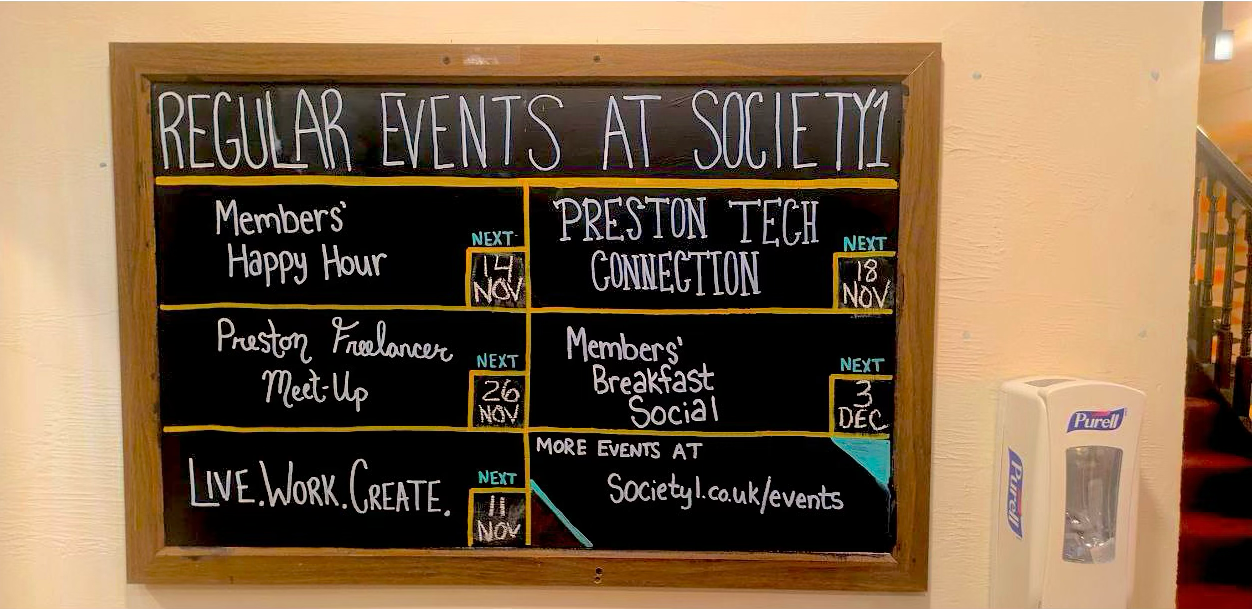

On Tuesday, I went to the Preston Tech Connection Meet-Up with my partner Alana, where Tom Stables, a lecturer in Digital Marketing at the University of Lancashire, led a talk and discussion about generative AI and the use of large language models. The room held a good split of folks: industry professionals with expertise in the creative arts, alongside others who used the technology for more logical, mathematical tasks such as software development.

The audience of around thirty people was very well informed about the issues surrounding AI, and most of those present had already used it in their day-to-day work. Given the state of discourse around large language models (LLMs), where admitting to using AI feels akin to admitting to a crippling drug addiction, I believe that, rather than chastising AI users, it is more productive for users to hold companies accountable for their promises.

This kind of meeting is a perfect example of AI users trying to understand the ethics of the technology they use and attempting to influence the research behind it. Tom Stables’ AI-influenced art was an interesting thought experiment for the audience, and it really highlighted for me the fact that we as humans naturally strive for technological perfection in our creations, which has kinda led us into this mess.

As a photographer with a decade of experience, I could see this in one of his videos, where the generative AI rendered a camera pan too smoothly, and in how it modelled a human body that, on close inspection, lacked texture resolution.

Seeing these things as a software developer has made me realise that the benchmarks we use to assess how “good” this technology is are absurd, as AI companies’ obsession with photorealistic perfection, measured against an arbitrary metric, produces bizarre results that fall into the uncanny valley. It’s clear to me that machines will never replace human input; they can only ever assist humanity. After all, many creatives like me still use old computers and workstations precisely because of their limitations.

As a practical example, I asked ChatGPT to write a poem about pineapples for this article. The source of ChatGPT’s inspiration is a mystery (a quick Google search turns up a partial match on a Facebook poetry page), but it’s easy to see that the response sounds sanitised and devoid of depth or emotion, despite a technically perfect grasp of English grammar. It is no substitute for a human poet who, unlike a lifeless machine, has actually touched and eaten a pineapple:

The Pineapple’s Golden Crown

Golden armour in the sun,

Tropical crown, your reign begun.

A tang of sweetness, sharp yet fine,

Beneath your spines, a nectar divine.

From distant shores where breezes play,

You greet the dawn in bright array.

A taste of summer, rich and rare,

Juice like sunlight, floating air.

Oh pineapple, jewel of the feast,

Your golden heart tames savage yeast.

A paradox of thorn and grace,

A sunlit smile on nature’s face.

Slice by slice, your story told,

In amber flesh and liquid gold.

To taste you is to chase the sea,

To sip on dreams of ecstasy.

- ChatGPT 5

Again, the problems with AI aren’t necessarily caused by LLMs themselves, but rather by humans chasing perfection with their assistance. I think we are asking the wrong question about AI ethics; the real question we need to answer as a society is: what makes human art so special?

AI Will Not Replace Humanity

Tom Stables is clearly a skilled artist, and I agreed with Alana that it would have been impossible to tell that his work used AI-generated content had he not told us beforehand. While his work was not to my taste, that was a matter of preference rather than any real artistic defect, and the AI arguably enhanced what he set out to do.

Contrast this with the 2025 Coca-Cola Christmas advert, where the use of AI cut the staff needed to produce it from 50 to 20 and resulted in a terrifying monstrosity for what is supposed to be a fun time of year.

It’s got all the hallmarks of bad AI use. The opening scene is completely AI-generated, and you can see how that has resulted in a creepy as fuck scene where everything seems to have gone through a Gaussian blur and the grilles on the truck look like they were modelled by a five-year-old, before Santa’s hand hits the lorry’s bounding box. It’s like these creatives have failed Computer Graphics 101.

While I was watching this advert, it struck me that everything had a soap opera effect. The motion looks like it has been smeared with Vaseline, the overall vibe is really off, and it all contributes to the feeling that the video is being played slowly.

Just look at those penguins, for example. You can see the classic overuse of a sharpening filter on the edges to try to compensate for the lack of detail in the generated footage.

Even the storyboarding is all over the place, with close-up shots of animals rather than of the people touched by Christmas, presumably because AI struggles with human faces, and I can only imagine the backlash would have been even worse with AI-generated people. Now that would truly be a form of replacing human extras!

The magic of the 90s version of the advert is completely gone. That version was clearly a labour of love by the creative folks at Coca-Cola, who wanted to bring magic to Christmas by stretching the limits of what can be considered “reasonable company expenses”, and isn’t that truly the spirit of Christmas after all?

The whole thing feels like a 2025 rush job rather than the statement of what technology can do that I suspect was intended. This is what happens when AI is brought in to solve a problem (here, the lack of budget and staffing for the advert), as opposed to letting teams use AI to enhance their work by saving them time and effort.

The problems of generative AI lie not in the technology itself but in how it is applied. Using it to replace human output entirely and relying on machines to do all the work will result in a bland replication of what has been done before.

There’s no way to “fix” the machine, as the machine is just doing what the user asked it to do. The problem here is purely down to corporate laziness and greed.

Real-World Issues in Smart Technology

These developments in how AI is used have dangerous real-world implications for people like me who live with a disability. I want to use accessibility features in technology that are powered by artificial intelligence, but I find it impossible to ignore the ethical concerns behind the latest technologies.

I therefore find myself in an impossible position, holding myself to a standard I can never meet, because I will never be able to address every concern. Annoyingly, in my experience it’s the simple things that are proving the most problematic, like installing smart bulbs and smart switches in my house so I don’t have to get up when I’m in chronic pain. I don’t want my devices sending my data to the cloud, or to inadvertently support corporate greed.

I absolutely detest what OpenAI and ChatGPT have become. I cannot support or justify OpenAI’s choice to allow its users to generate erotica, and until recently Microsoft was directly supporting Israel’s war crimes in Gaza through Azure (and, by extension, Copilot). That means there is no good option for LLMs unless you roll your own, which takes time, effort, knowledge, and money for hardware.

I love the idea of Copilot’s AI File Explorer, for example: as someone with ADHD, it would be a massive help to simply describe the file I’m looking for instead of navigating the mess I’ve created over the years. But especially as a privacy-conscious journalist, there is no way on earth I can trust Microsoft.

With a background in Computer Science, I understand that I could use open-source software to control things in my home by running it as a virtual machine on the Queer Love Riot server. In our household, we have decided that an Alexa is out of the question because of its spying, and other companies’ solutions have exactly the same problems. However, it’s become a catch-22: I need the technology already in place to build a better solution.

My use of ChatGPT as a de facto intern for Queer Love Riot is therefore out of necessity, not choice. There are now often times when I cannot sit or work at a computer for long periods, and I struggle to keep my hands on the keyboard. In this case, ChatGPT acts for me as an autocomplete and accessibility aid rather than a creative force, as I mostly use it to write repetitive code that has no artistic value.

I’m now using Apple Intelligence instead for simple tasks such as proofreading. So far, Apple seems to have the most sensible policy of the options available, and it has clearly put a lot of effort into making AI assist you rather than try to do all the work for you. It’s like having Grammarly built into the OS, and because many of the models run on-device, there are far fewer privacy concerns, so Apple got that right.

Even Apple has fucked up, however, by scraping publicly available websites for training data, and it is currently facing a lawsuit over the unauthorised use of books to train its models. Apple, you were so close to creating something ethically decent!

In Apple’s defence, regulation is still very much developing. I can see the argument that being on the bleeding edge means there isn’t really a playbook or set of rules to follow, which encourages firms to cut ethical corners to keep up with the competition or risk losing funding entirely, as AI is not currently profitable at all.

Conclusion

We will get there with AI. I think many of its problems will be solved in time, and we will move on to the next controversial technology. Technology is cyclical, and these things come and go. After all, even Siri is still divisive, and I remember how bemused people were when Apple released the iPhone 4S with a crappy voice assistant as its main selling point. It got better.

We live in extremely difficult times, marked by political change, a global economic slowdown, and ineffective governance, with the threat of the far right looming over us. However, I personally believe that as long as people keep trying to shape the future, we too will get through this technology bubble, even if it has to burst before things get better.

Reporting by Emily Elżbieta Chomicz, investigative reporter for Queer Love Riot.
